What is Nsight Compute

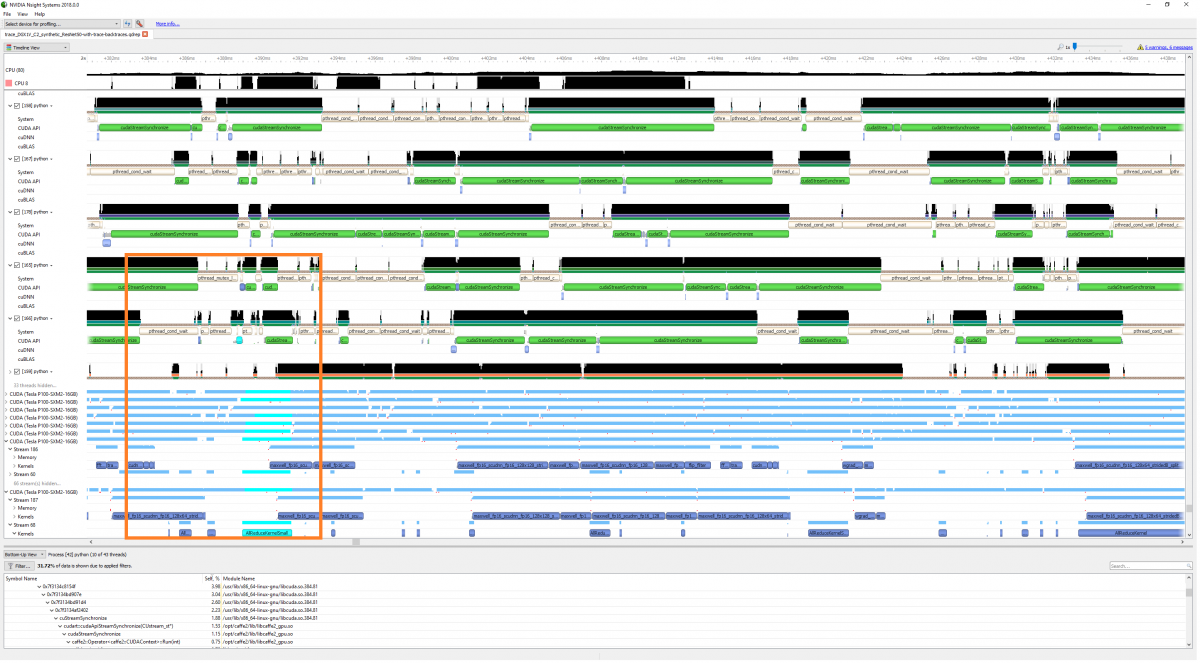

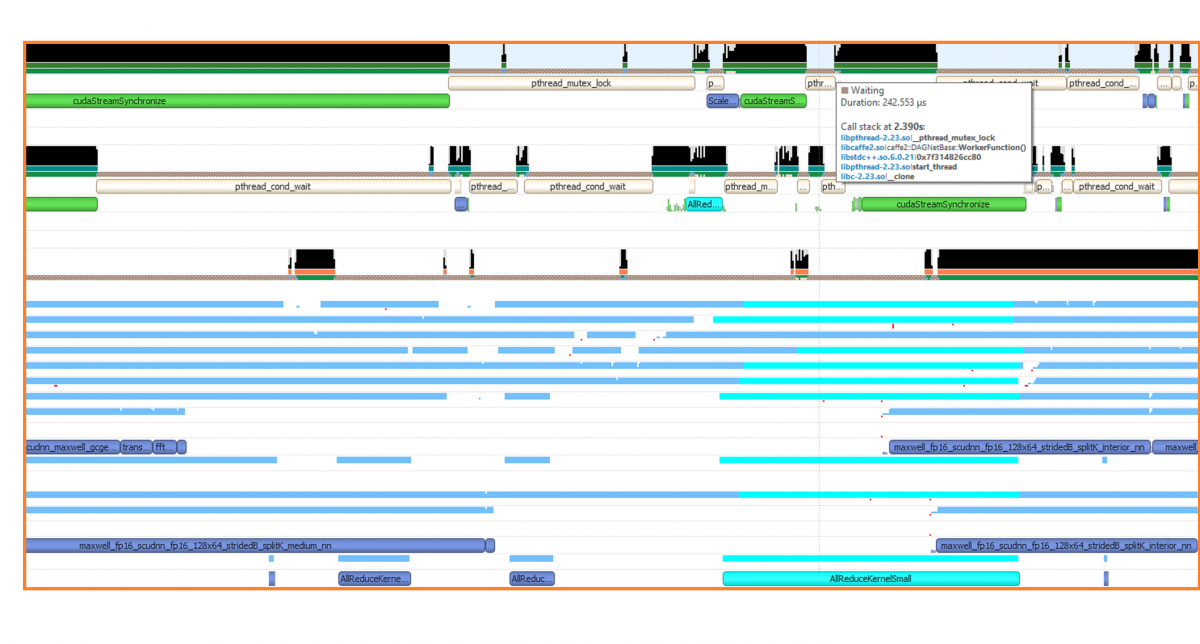

NVIDIA Nsight Systems

NVIDIA® Nsight™ Systems is a system-wide performance analysis tool designed to visualize an application’s algorithms, help you identify the largest opportunities to optimize, and tune to scale efficiently across any quantity or size of CPUs and GPUs, from large servers to our smallest SoC.

Overview

NVIDIA Nsight Systems is a low-overhead performance analysis tool designed to provide the insights developers need to optimize their software. Unbiased activity data is visualized within the tool to help users investigate bottlenecks, avoid inferring false positives, and pursue optimizations with a higher probability of performance gains. Users can identify issues such as GPU starvation, unnecessary GPU synchronization, insufficient CPU parallelization, and even unexpectedly expensive algorithms across the CPUs and GPUs of their target platform. It is designed to scale across a wide range of NVIDIA platforms, such as large Tesla multi-GPU x86 servers, Quadro workstations, Optimus-enabled laptops, DRIVE devices with Tegra+dGPU multi-OS, and Jetson. NVIDIA Nsight Systems can even provide valuable insight into the behavior and load of deep learning frameworks such as PyTorch and TensorFlow, allowing users to tune their models and parameters to increase overall single or multi-GPU utilization.

Platforms

Learn about Nsight Systems on your platform:

Release Highlights

Downloads

Available for profiling directly on Linux workstations and servers, including the NVIDIA DGX line, or remotely from a variety of hosts: Windows, Linux, or MacOSX.

Documentation

Support

To provide feedback, request additional features, or report support issues, please use the Developer Forums.

System Requirements

Supported target operating systems for data collection:

Supported target hardware

Supported target software

Supported host operating systems for data visualization:

Release Highlights

Downloads

Visual Studio Integration (requires Nsight Systems to be installed)

System Requirements

Supported operating systems

Supported target hardware

Supported target software

Release Highlights

2019.4

2019.3

Downloads

Nsight Systems is bundled as part of the following product development suites:

System Requirements

Supported Target Hardware

Supported target operating systems for data collection:

Supported host operating systems for data visualization:

Features

Learn about feature support per target platform group

| Feature | Linux Workstations and Servers | Windows Workstations and Gaming PCs | Jetson Autonomous Machines | DRIVE Autonomous Vehicles |
|---|---|---|---|---|
| View system-wide application behavior across CPUs and GPUs | | | | |
| CPU cores utilization, process, & thread activities | yes | yes | yes | yes |
| CPU thread periodic sampling backtraces | yes* | no | yes | yes |
| CPU thread blocked state backtraces | yes** | yes | yes | yes |
| CPU performance counter sampling | no | no | yes | yes |
| GPU workload trace | yes | yes | yes | yes |
| GPU context switch trace | no | no | yes | yes |
| SOC hypervisor trace | — | — | — | yes |
| SOC memory bandwidth sampling | — | — | yes | yes |
| SOC Accelerators trace | — | — | Xavier | Xavier |
| OS Event Trace | ftrace | ETW | ftrace | ftrace |
| Investigate CPU-GPU interactions and bubbles | | | | |
| User annotations API trace NVIDIA Tools Extension API (NVTX) | yes | yes | yes | yes |
| CUDA API | yes | yes | yes | yes |
| CUDA libraries trace (cuBLAS, cuDNN & TensorRT) | yes | no | yes | yes |
| OpenGL API trace | yes | yes | yes | yes |
| Vulkan API trace | yes | yes | no | no |
| Direct3D12, Direct3D11, DXR, & PIX APIs | — | yes | — | — |
| OptiX | 7.1+ | 7.1+ | — | — |
| Bidirectional correlation of API and GPU workload | yes | yes | yes | yes |
| Identify GPU idle and sparse usage | yes | yes | yes | yes |
| Multi-GPU Graphics trace | — | Direct3D12 | — | — |
| Ready for big data | | | | |
| Fast GUI capable of visualizing in excess of 10 million events on laptops | yes | yes | yes | yes |
| Additional command line collection tool | yes | no | no | no |
| NV-Docker container support | yes | — | — | — |
| NVIDIA GPU Cloud support | yes | — | — | — |
| Minimum user privilege level | user | administrator | root | root |

* On Intel Haswell and newer CPU architectures

** Only with OS runtime trace enabled. Some syscalls, such as those made from handcrafted assembly, may be missed. Backtraces may only appear if time thresholds are exceeded.

What Users Are Saying

Tracxpoint

We noticed that our new Quadro P6000 server was ‘starved’ during training and we needed experts for supporting us. NVIDIA Nsight Systems helped us to achieve over 90 percent GPU utilization. A deep learning model that previously took 600 minutes to train, now takes only 90.

Felix Goldberg, Chief AI Scientist, Tracxpoint

NVIDIA

I used Nsight Systems to analyze our internal system and built a plan for optimizing both CPU and GPU usage, with significant performance and resource gains ultimately achieved to both. Overall, there is no alternative tool like Nsight which helps me to extract only, and exactly what I need to understand resource usage.

Sang Hun Lee, System Software Engineer, NVIDIA

NIH Center for Macromolecular Modeling and Bioinformatics at University of Illinois at Urbana-Champaign

Watch John Stone present how he achieved over a 3x performance increase in VMD, a popular tool for analyzing large biomolecular systems.

Related Media

The 2019.6 release aims to provide more detailed data collection, exploration, and collection control for all markets, ranging from high performance computing to visual effects. 2019.6 introduces new data sources, improved visual data navigation, expanded CLI capabilities, and extended export coverage and statistics.

The NVIDIA Nsight Systems 2020.1 release adds CLI support for the Power9 architecture, the ability to run multiple recording sessions simultaneously in the CLI, and UX improvements and stats export options in the GUI and CLI.

In the 2020.3 release, Nsight Systems adds the ability to analyze applications parallelized using OpenMP.

In the 2019.3 release, Nsight Systems adds the ability to analyze reports using statistics to identify opportunities for improving your GPU-accelerated application.

The 2019.4 release aims to provide more detailed data collection, exploration, and collection control for all markets, ranging from high performance computing to visual effects. 2019.4 introduces new data sources, improved visual data navigation, expanded CLI capabilities, and extended export coverage and statistics.

In the 2019.3 release, Nsight Systems adds the ability to trace Vulkan on Windows and Linux targets, allowing you to inspect the CPU/GPU relationship and solve complicated frame stuttering issues in your Vulkan application.

Watch John Stone, of the NIH Center for Macromolecular Modeling and Bioinformatics at University of Illinois at Urbana-Champaign, discuss how he achieved over a 3x performance increase of VMD, a popular tool for analyzing large biomolecular systems.

In the drone industry, the weight and size of the main board is critical. With the ZED stereo camera by Stereolabs, developers can capture the world in 3D and map 3D models of indoor and outdoor scenes up to 20 meters. The small form factor of the Jetson TX1 enables Stereolabs to bring advanced computer vision capabilities to smaller and smaller systems. See what is possible when these two technologies come together in drones to power the latest virtual reality applications.

An introduction to the latest NVIDIA System Profiler. Includes a UI walkthrough and setup details for NVIDIA System Profiler on the NVIDIA Jetson Embedded Platform. Download and learn more here.

NVIDIA Nsight Systems now includes support for tracing NCCL (NVIDIA Collective Communications Library) usage in your CUDA application. Download and learn more here.

NVIDIA® Nsight™ Systems is an indispensable system-wide performance analysis tool, designed to help developers tune and scale software across CPUs and GPUs. Download and learn more here.

What is Nsight Compute

The user manual for NVIDIA Nsight Compute.

NVIDIA Nsight Compute (UI) user manual. Information on all views, controls and workflows within the tool. Description of PC sampling metrics and shipped section files.

1. Introduction

1.1. Overview

This document is a user guide to the next-generation NVIDIA Nsight Compute profiling tools. NVIDIA Nsight Compute is an interactive kernel profiler for CUDA applications. It provides detailed performance metrics and API debugging via a user interface and command line tool. In addition, its baseline feature allows users to compare results within the tool. NVIDIA Nsight Compute provides a customizable and data-driven user interface and metric collection and can be extended with analysis scripts for post-processing results.

2. Quickstart

2.1. Interactive Profile Activity

Use the API Stream window to step through the calls into the instrumented API. The dropdown at the top allows switching between different CPU threads of the application. Step In (F11), Step Over (F10), and Step Out (Shift + F11) are available from the Debug menu or the corresponding toolbar buttons. While stepping, function return values and function parameters are captured.

To quickly isolate a kernel launch for profiling, use the Run to Next Kernel button in the toolbar of the API Stream window to jump to the next kernel launch. The execution will stop before the kernel launch is executed.

Once the execution of the target application is suspended at a kernel launch, additional actions become available in the UI. These actions are available either from the menu or from the toolbar. Note that the actions are disabled if the API stream is not at a qualifying state (not at a kernel launch, or launching on an unsupported GPU). To profile, press Profile Kernel and wait until the result is shown in the Profiler Report. Profiling progress is reported in the lower right corner status bar.

Profile Series allows you to configure the collection of a set of profile results at once. Each result in the set is profiled with varying parameters. Series are useful to investigate the behavior of a kernel across a large set of parameters without the need to recompile and rerun the application many times.

For a detailed description of the options available in this activity, see Interactive Profile Activity.

2.2. Non-Interactive Profile Activity

The Sections tab allows you to select which sections should be collected for each kernel launch. Hover over a section to see its description as a tooltip. To change the sections that are enabled by default, use the Sections/Rules Info tool window.

For a detailed description of the options available in this activity, see Profile Activity.

2.3. Navigate the Report

The profile report comes up by default on the Details page. You can switch between different Report Pages of the report with the dropdown labeled Page on the top-left of the report. A report can contain any number of results from kernel launches. The Launch dropdown allows switching between the different results in a report.

Use the Clear Baselines entry from the dropdown button, the Profile menu or the corresponding toolbar button to remove all baselines. For more information see Baselines.

On the Details page some sections may provide rules. Press the Apply button to execute an individual rule. The Apply Rules button on the top executes all available rules for the current result in focus. Rules can be user-defined too. For more information see the Customization Guide.

3. Connection Dialog

Use the Connection Dialog to launch and attach to applications on your local and remote platforms. Start by selecting the Target Platform for profiling. By default (and if supported) your local platform will be selected. Select the platform on which you would like to start the target application or connect to a running process.

When using a remote platform, you will be asked to select or create a Connection in the top drop down. To create a new connection, select + and enter your connection details. When using the local platform, localhost will be selected as the default and no further connection settings are required. You can still create or select a remote connection if profiling will be on a remote system of the same platform.

Depending on your target platform, select either Launch or Remote Launch to launch an application for profiling on the target. Note that Remote Launch will only be available if supported on the target platform.

Select Attach to attach the profiler to an application already running on the target platform. This application must have been started using another NVIDIA Nsight Compute CLI instance. The list will show all application processes running on the target system which can be attached. Select the refresh button to re-create this list.

3.1. Remote Connections

NVIDIA Nsight Compute supports both password and private key authentication methods. In this dialog, select the authentication method and enter the following information:

In addition to keyfiles specified by path and plain password authentication, NVIDIA Nsight Compute supports keyboard-interactive authentication, standard keyfile path searching and SSH agents.

When all information is entered, click the Add button to make use of this new connection.

Note that once either activity type has been launched remotely, the tools necessary for further profiling sessions can be found in the Deployment Directory on the remote device.

On Linux and Mac host platforms, NVIDIA Nsight Compute supports SSH remote profiling on target machines which are not directly addressable from the machine the UI is running on through the ProxyJump and ProxyCommand SSH options.

These options can be used to specify intermediate hosts to connect to or actual commands to run to obtain a socket connected to the SSH server on the target host and can be added to your SSH configuration file.

Note that for both options, NVIDIA Nsight Compute runs external commands and does not implement any mechanism to authenticate to the intermediate hosts using the credentials entered in the Connection Dialog. These credentials will only be used to authenticate to the final target in the chain of machines.

A common way to authenticate to the intermediate hosts in this case is to use an SSH agent and have it hold the private keys used for authentication.

Since the OpenSSH SSH client is used, you can also use the SSH askpass mechanism to handle these authentications in an interactive manner.

On slow networks, connections used for remote profiling through SSH might time out. If this is the case, the ConnectTimeout option can be used to set the desired timeout value.

For more information about the available options for the OpenSSH client and the ecosystem of tools it can be used with for authentication, refer to the official manual pages.

3.2. Interactive Profile Activity

The Interactive Profile activity allows you to initiate a session that controls the execution of the target application, similar to a debugger. You can step API calls and workloads (CUDA kernels), pause and resume, and interactively select the kernels of interest and which metrics to collect.

This activity currently does not support profiling or attaching to child processes.

Enable CPU Call Stack: Collect the CPU-sided Call Stack at the location of each profiled kernel launch.

Enable NVTX Support: Collect NVTX information provided by the application or its libraries. Required to support stepping to specific NVTX contexts.

Disable Profiling Start/Stop: Ignore calls to cu(da)ProfilerStart or cu(da)ProfilerStop made by the application.

Enable Profiling From Start: Enables profiling from the application start. Disabling this is useful if the application calls cu(da)ProfilerStart and kernels before the first call to this API should not be profiled. Note that disabling this does not prevent you from manually profiling kernels.

3.3. Profile Activity

The Profile activity provides a traditional, pre-configurable profiler. After configuring which kernels to profile, which metrics to collect, etc., the application is run under the profiler without interactive control. The activity completes once the application terminates. For applications that normally do not terminate on their own, e.g. interactive user interfaces, you can cancel the activity once all expected kernels are profiled.

All remaining options map to their command line profiler equivalents. See the Command Line Options for details.

3.4. Reset

Entries in the connection dialog are saved as part of the current project. When working in a custom project, simply close the project to reset the dialog.

4. Main Menu and Toolbar

Information on the main menu and toolbar.

4.1. Main Menu

Freeze API: When disabled, all CPU threads are enabled and continue to run during stepping or resume, and all threads stop as soon as at least one thread arrives at the next API call or launch. This also means that during stepping or resume the currently selected thread might change, as the old selected thread makes no forward progress and the API Stream automatically switches to the thread with a new API call or launch. When enabled, only the currently selected CPU thread is enabled. All other threads are disabled and blocked.

Stepping now completes if the current thread arrives at the next API call or launch. The selected thread never changes. However, if the selected thread does not call any further API calls or waits at a barrier for another thread to make progress, stepping may not complete and hang indefinitely. In this case, pause, select another thread, and continue stepping until the original thread is unblocked. In this mode, only the selected thread will ever make forward progress.

4.2. Main Toolbar

The main toolbar shows commonly used operations from the main menu. See Main Menu for their description.

4.3. Status Banners

Status banners are used to display important messages, such as profiler errors. The message can be dismissed by clicking the ‘X’ button. The number of banners shown at the same time is limited, and old messages can get dismissed automatically if new ones appear. Use the Output Messages window to see the complete message history.

5. Tool Windows

5.1. API Statistics

The API Statistics window is available when NVIDIA Nsight Compute is connected to a target application. It opens by default as soon as the connection is established. It can be re-opened using Debug > API Statistics from the main menu.

Whenever the target application is suspended, it shows a summary of tracked API calls with some statistical information, such as the number of calls and their total, average, minimum and maximum duration. Note that this view cannot be used as a replacement for Nsight Systems when trying to optimize CPU performance of your application.

The Reset button deletes all statistics collected to the current point and starts a new collection. Use the Export to CSV button to export the current statistics to a CSV file.
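As a rough illustration of the kind of summary this window presents (number of calls, total, average, minimum and maximum duration), the following sketch computes those values for a list of timed API calls. The record format, the `summarize_calls` helper and the durations are assumptions made for this example; they are not part of NVIDIA Nsight Compute or its export format.

```python
from collections import defaultdict


def summarize_calls(records):
    """Group (api_name, duration_us) records and compute per-API statistics."""
    durations = defaultdict(list)
    for name, duration_us in records:
        durations[name].append(duration_us)

    summary = {}
    for name, values in durations.items():
        summary[name] = {
            "calls": len(values),
            "total": sum(values),
            "average": sum(values) / len(values),
            "minimum": min(values),
            "maximum": max(values),
        }
    return summary


# Hypothetical durations in microseconds, invented for illustration only.
records = [
    ("cudaMalloc", 120.0),
    ("cudaMemcpy", 40.0),
    ("cudaMemcpy", 60.0),
    ("cudaLaunchKernel", 8.0),
]

stats = summarize_calls(records)
for name in sorted(stats):
    print(name, stats[name])
```

Such a summary only aggregates call durations; it says nothing about where on the timeline the calls happened, which is why a system-wide tool is still needed for CPU-side optimization.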

5.2. API Stream

The API Stream window is available when NVIDIA Nsight Compute is connected to a target application. It opens by default as soon as the connection is established. It can be re-opened using Debug > API Stream from the main menu.

Whenever the target application is suspended, the window shows the history of API calls and traced kernel launches. The currently suspended API call or kernel launch (activity) is marked with a yellow arrow. If the suspension is at a subcall, the parent call is marked with a green arrow. The API call or kernel is suspended before being executed.

For each activity, further information is shown, such as the kernel name or the function parameters (Func Parameters) and return value (Func Return). Note that the function return value will only become available once you step out or over the API call.

Use the Current Thread dropdown to switch between the active threads. The dropdown shows the thread ID followed by the current API name. One of several options can be chosen in the trigger dropdown and is executed by the adjacent button. Run to Next Kernel resumes execution until the next kernel launch is found in any enabled thread. Run to Next API Call resumes execution until the next API call matching Next Trigger is found in any enabled thread. Run to Next Range Start resumes execution until the next start of an active profiler range is found. Profiler ranges are defined by using the cu(da)ProfilerStart/Stop API calls. Run to Next Range End resumes execution until the next stop of an active profiler range is found. The API Level dropdown changes which API levels are shown in the stream. The Export to CSV button exports the currently visible stream to a CSV file.

5.3. Baselines

The Baselines tool window can be opened by clicking the Baselines entry in the Profile menu. It provides a centralized place from which to manage configured baselines. (Refer to Baselines for information on how to create baselines from profile results.)

The baseline visibility can be controlled by clicking on the check box in a table row. When the check box is checked, the baseline will be visible in the summary header as well as all graphs in all sections. When unchecked the baseline will be hidden and will not contribute to metric difference calculations.

The baseline color can be changed by double-clicking on the color swatch in the table row. The color dialog which is opened provides the ability to choose an arbitrary color as well as offers a palette of predefined colors associated with the stock baseline color rotation.

The baseline name can be changed by double-clicking on the Name column in the table row. The name must not be empty and must be shorter than the Maximum Baseline Name Length specified in the options dialog.

The z-order of a selected baseline can be changed by clicking the Move Baseline Up and Move Baseline Down buttons in the tool bar. When a baseline is moved up or down, its new position will be reflected in the report header as well as in each graph. Currently, only one baseline may be moved at a time.

The selected baselines may be removed by clicking on the Clear Selected Baselines button in the tool bar. All baselines can be removed at once by clicking on the Clear All Baselines button, from either the global tool bar or the tool window tool bar.

Baseline information can be loaded by clicking on the Load Baselines button in the tool bar. When a baseline file is loaded, currently configured baselines will be replaced. A dialog will be presented to the user to confirm this operation when necessary.

5.4. NVTX

Use the Current Thread dropdown in the API Stream window to change the currently selected thread. NVIDIA Nsight Compute supports NVTX named resources, such as threads, CUDA devices, CUDA contexts, etc. If a resource is named using NVTX, the appropriate UI elements will be updated.

5.5. Resources

The Resources window is available when NVIDIA Nsight Compute is connected to a target application. It shows information about the currently known resources, such as CUDA devices, CUDA streams or kernels. The window is updated every time the target application is suspended. If closed, it can be re-opened using Debug > Resources from the main menu.

Using the dropdown on the top, different views can be selected, where each view is specific to one kind of resource (context, stream, kernel, …). The Filter edit allows you to create filter expressions using the column headers of the currently selected resource.

The resource table shows all information for each resource instance. Each instance has a unique ID, the API Call ID when this resource was created, its handle, associated handles, and further parameters. When a resource is destroyed, it is removed from its table.

5.6. Sections/Rules Info

The Section Sets view shows all available section sets. Each set is associated with a number of sections. You can choose a set appropriate to the level of detail for which you want to collect performance metrics. Sets which collect more detailed information normally incur higher runtime overhead during profiling.

When enabling a set in this view, the associated sections are enabled in the Sections/Rules view. When disabling a set in this view, the associated sections in the Sections/Rules view are disabled. If no set is enabled, or if sections are manually enabled/disabled in the Sections/Rules view, the custom entry is marked active to represent that no section set is currently enabled. Note that the default set is enabled by default.

Whenever a kernel is profiled manually, or when auto-profiling is enabled, only sections enabled in the Sections/Rules view are collected. Similarly, whenever rules are applied, only rules enabled in this view are active.

The enabled states of sections and rules are persisted across NVIDIA Nsight Compute launches. The Reload button reloads all sections and rules from disk again. If a new section or rule is found, it will be enabled if possible. If any errors occur while loading a rule, they will be listed in an extra entry with a warning icon and a description of the error.

Use the Enable All and Disable All checkboxes to enable or disable all sections and rules at once. The Filter text box can be used to filter what is currently shown in the view. It does not alter activation of any entry.

The table shows sections and rules with their activation status, their relationship and further parameters, such as associated metrics or the original file on disk. Rules associated with a section are shown as children of their section entry. Rules independent of any section are shown under an additional Independent Rules entry.

When a section or rule file is modified, the entry in the State column will show User Modified to reflect that it has been modified from its default state. When a row is selected, the Restore button will be enabled. Clicking the Restore button will restore the entry to its default state and automatically reload the sections and rules.

6. Profiler Report

The profiler report contains all the information collected during profiling for each kernel launch. In the user interface, it consists of a header with general information, as well as controls to switch between report pages or individual collected launches. By default, the report starts with the Details page selected.

6.1. Header

The Page dropdown can be used to switch between the available report pages, which are explained in detail in the next section.

The Launch dropdown can be used to switch between all collected kernel launches. The information displayed in each page commonly represents the selected launch instance. On some pages (e.g. Raw), information for all launches is shown and the selected instance is highlighted. You can type in this dropdown to quickly filter and find a kernel launch.

The Apply Filters button opens the filter dialog. You can use more than one filter to narrow down your results. In the filter dialog, enter your filter parameters and press the OK button. The Launch dropdown will be filtered accordingly. Select the arrow dropdown to access the Clear Filters button, which removes all filters.

Note that not all functions are available on all pages.

6.2. Report Pages

Use the dropdown in the header to switch between the report pages.

6.2.1. Session Page

This page contains basic information about the report and the machine, as well as device attributes of all devices for which launches were profiled. When switching between launch instances, the respective device attributes are highlighted.

6.2.2. Summary Page

The Summary page shows a list of all collected results in this report, with selected important summary metrics. It gives you a quick comparison overview across all profiled kernel launches. You can transpose the table of kernels and metrics by using the Transpose button.

6.2.3. Details Page

By default, once a new profile result is collected, all applicable rules are applied. Any rule results will be shown as Recommendations on this page. Most rule results will be purely informative or have a warning icon to indicate some performance problem. Results with error icons typically indicate an error while applying the rule.

If a rule result references another report section, it will appear as a link in the recommendation. Select the link to scroll to the respective section. If the section was not collected in the same profile result, enable it in the Sections/Rules Info tool window.

You can add or edit comments in each section of the view by clicking on the comment button (speech bubble). The comment icon will be highlighted in sections that contain a comment. Comments are persisted in the report and are summarized in the Comments Page.

Besides their header, sections typically have one or more bodies with additional charts or tables. Click the triangle icon in the top-left corner of each section to show or hide those. If a section has multiple bodies, a dropdown in their top-right corner allows you to switch between them.

If enabled, the GPU Speed Of Light section contains a Roofline chart that is particularly helpful for visualizing kernel performance at a glance. (To enable roofline charts in the report, ensure that the section is enabled when profiling.) More information on how to use and read this chart can be found in Roofline Charts.

NVIDIA Nsight Compute ships with several different definitions for roofline charts, including hierarchical rooflines. These additional rooflines are defined in different section files. While not part of the full section set, a new section set called roofline was added to collect and show all rooflines in one report. The idea of hierarchical rooflines is that they define multiple ceilings that represent the limiters of a hardware hierarchy. For example, a hierarchical roofline focusing on the memory hierarchy could have ceilings for the throughputs of the L1 cache, L2 cache and device memory. If the achieved performance of a kernel is limited by one of the ceilings of a hierarchical roofline, it can indicate that the corresponding unit of the hierarchy is a potential bottleneck.
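The idea of a hierarchical roofline can be sketched with a few lines of arithmetic. The example below uses the textbook roofline model, where attainable performance is the smaller of the compute peak and arithmetic intensity times bandwidth, and evaluates one ceiling per memory level. The function names, the peak throughput, the bandwidths and the traffic volumes are all hypothetical values chosen for readability; they are not taken from NVIDIA Nsight Compute or from any real device.

```python
def attainable_flops(arithmetic_intensity, peak_flops, bandwidth):
    """Textbook roofline: capped by compute or by intensity * bandwidth."""
    return min(peak_flops, arithmetic_intensity * bandwidth)


def hierarchical_ceilings(flops, bytes_moved, peak_flops, bandwidths):
    """Evaluate one roofline ceiling per level of the memory hierarchy.

    bytes_moved maps each level to the traffic observed at that level, so
    each level gets its own arithmetic intensity (FLOP per byte).
    """
    ceilings = {}
    for level, bandwidth in bandwidths.items():
        intensity = flops / bytes_moved[level]
        ceilings[level] = attainable_flops(intensity, peak_flops, bandwidth)
    return ceilings


# Hypothetical numbers, invented for illustration only.
PEAK_FLOPS = 10.0e12  # FLOP/s
BANDWIDTHS = {"L1 cache": 8.0e12, "L2 cache": 2.0e12, "device memory": 0.5e12}  # bytes/s
BYTES_MOVED = {"L1 cache": 4.0e9, "L2 cache": 2.0e9, "device memory": 1.0e9}
KERNEL_FLOPS = 4.0e9

ceilings = hierarchical_ceilings(KERNEL_FLOPS, BYTES_MOVED, PEAK_FLOPS, BANDWIDTHS)
limiter = min(ceilings, key=ceilings.get)
print(ceilings, "lowest ceiling:", limiter)
```

With these made-up inputs the lowest ceiling belongs to device memory, which is the kind of hint a hierarchical roofline is meant to give about a potential bottleneck.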

The roofline chart can be zoomed and panned for more effective data analysis, using the controls in the table below.

If enabled, the Memory Workload Analysis section contains a Memory chart that visualizes data transfers, cache hit rates, instructions and memory requests. More information on how to use and read this chart can be found in the Kernel Profiling Guide.

Sections such as Source Counters can contain source hot spot tables. These tables indicate the N highest or lowest values of one or more metrics in your kernel source code. Select the location links to navigate directly to this location in the Source Page. Hover the mouse over a value to see which metrics contribute to it.
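Conceptually, a hot spot table is a top-N (or bottom-N) selection over per-location metric values. The sketch below shows that selection with Python's `heapq` module. The `hot_spots` helper, the location strings and the sample counts are hypothetical and only illustrate the idea; the tool's own selection logic is not documented here.

```python
import heapq


def hot_spots(metric_per_location, n=3, highest=True):
    """Return the n locations with the highest (or lowest) metric values."""
    pick = heapq.nlargest if highest else heapq.nsmallest
    return pick(n, metric_per_location.items(), key=lambda item: item[1])


# Hypothetical per-line sample counts ("file:line" -> samples), for illustration only.
samples = {
    "kernel.cu:12": 40,
    "kernel.cu:18": 310,
    "kernel.cu:21": 95,
    "kernel.cu:27": 7,
    "kernel.cu:30": 150,
}

top = hot_spots(samples, n=3)
bottom = hot_spots(samples, n=2, highest=False)
print("highest:", top)
print("lowest:", bottom)
```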

6.2.4. Source Page

Overview

The Source page correlates assembly (SASS) with high-level code and PTX. In addition, it displays metrics that can be correlated with source code. It is filtered to only show (SASS) functions that were executed in the kernel launch.

Source Correlation

The View dropdown can be used to select different code (correlation) options. This includes SASS, PTX and Source (CUDA-C), as well as their combinations. Which options are available depends on the source information embedded into the executable.

You can use the Find (source code) line edit to search the Source column. Enter the text to search and use the associated buttons to find the next or previous occurrence in this column. While the line edit is selected, you can also use the Enter or Shift+Enter keys to search for the next or previous occurrence, respectively.

If the source files cannot be found in their original location, only filenames are shown in the view, together with a File Not Found error. This can occur, for example, if the report was moved to a different system. Select a filename and click the Resolve button above to specify where this source can be found on the local filesystem. However, the view always shows the source files if the import source option was selected during profiling and the files were available at that time. If a file is found in its original or any source lookup location, but its attributes don’t match, a File Mismatch error is shown. See the Source Lookup options for changing file lookup behavior.

If the report was collected using remote profiling, and automatic resolution of remote files is enabled in the Profile options, NVIDIA Nsight Compute will attempt to load the source from the remote target. If the connection credentials are not yet available in the current NVIDIA Nsight Compute instance, they are prompted in a dialog. Loading from a remote target is currently only available for Linux x86_64 targets and Linux and Windows hosts.

Metrics Correlation

The heatmap on the right-hand side of each view can be used to quickly identify locations with high values of the metric currently selected in the dropdown. The heatmap uses a black-body radiation color scale, where black denotes the lowest mapped value and white the highest. The current scale is shown when clicking and holding the heatmap with the right mouse button.

If a view contains multiple source files or functions, [+] and [-] buttons are shown. These can be used to expand or collapse the view, thereby showing or hiding the file or function content except for its header. If collapsed, all metrics are shown aggregated to provide a quick overview.

Number of registers that need to be kept valid by the compiler. A high value indicates that many registers are required at this code location, potentially increasing the register pressure and the maximum number of registers required by the kernel.

The number of samples from the Statistical Sampler at this program location.

The number of samples from the Statistical Sampler at this program location on cycles in which the warp scheduler issued no instructions. Note that these samples may be taken on a different profiling pass than the samples mentioned above, so their values do not strictly correlate.

This metric is only available on devices with compute capability 7.0 or higher.

Number of times the source (instruction) was executed by any warp.

Number of times the source (instruction) was executed by any active, predicated-on thread. For instructions that are executed unconditionally (i.e. without a predicate), this is the number of active threads in the warp, multiplied by the respective warp-level execution count.

Number of divergent branch targets, including fallthrough. Incremented only when there are two or more active threads with divergent targets. Divergent branches can lead to warp stalls due to resolving the branch or instruction cache misses.
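As a rough illustration of how the warp-level and thread-level execution counts described above relate for an unconditionally executed instruction, the following sketch multiplies the two factors. The function name and the sample numbers are hypothetical and are not part of NVIDIA Nsight Compute.

```python
def thread_instructions_executed(warp_executions, active_threads_per_warp):
    """Thread-level count for an instruction executed without a predicate.

    Assumes every execution of the instruction ran with the same number of
    active threads, which is a simplification for illustration only.
    """
    return warp_executions * active_threads_per_warp


# Hypothetical example: 128 warp-level executions with all 32 threads active.
print(thread_instructions_executed(128, 32))  # 4096
```

A thread-level count well below 32 times the warp-level count suggests that many executions ran with inactive or predicated-off threads.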

| Label | Name | Description |
|---|---|---|
| Address Space | memory_type | The accessed address space (global/local/shared). |
| Access Operation | memory_access_type | The type of memory access (e.g. load or store). |
| Access Size | memory_access_size_type | The size of the memory access, in bits. |
| L1 Tag Requests Global | memory_l1_tag_requests_global | Number of L1 tag requests generated by global memory instructions. |
| L1 Wavefronts Shared Excessive | derived__memory_l1_wavefronts_shared_excessive | Excessive number of wavefronts in L1 from shared memory instructions, because not every not-predicated-off thread performed the operation. Note: This is a derived metric which cannot be collected directly. |
| L1 Wavefronts Shared | memory_l1_wavefronts_shared | Number of wavefronts in L1 from shared memory instructions. |
| L1 Wavefronts Shared Ideal | memory_l1_wavefronts_shared_ideal | Ideal number of wavefronts in L1 from shared memory instructions, assuming each not-predicated-off thread performed the operation. |
| L2 Theoretical Sectors Global Excessive | derived__memory_l2_theoretical_sectors_global_excessive | Excessive theoretical number of sectors requested in L2 from global memory instructions, because not every not-predicated-off thread performed the operation. Note: This is a derived metric which cannot be collected directly. |
| L2 Theoretical Sectors Global | memory_l2_theoretical_sectors_global | Theoretical number of sectors requested in L2 from global memory instructions. |
| L2 Theoretical Sectors Global Ideal | memory_l2_theoretical_sectors_global_ideal | Ideal number of sectors requested in L2 from global memory instructions, assuming each not-predicated-off thread performed the operation. |
| L2 Theoretical Sectors Local | memory_l2_theoretical_sectors_local | Theoretical number of sectors requested in L2 from local memory instructions. |
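The table lists measured, ideal and excessive variants of the same counters. The sketch below shows one plausible way to relate them. It assumes that the excessive value is the measured value minus the ideal value, which the table does not state explicitly, and the helper names and numbers are hypothetical.

```python
def excessive_count(measured, ideal):
    """Assumed relation: excess = measured - ideal, never below zero."""
    return max(measured - ideal, 0)


def excess_share(measured, ideal):
    """Fraction of the measured count that exceeds the ideal count."""
    if measured == 0:
        return 0.0
    return excessive_count(measured, ideal) / measured


# Hypothetical values for memory_l1_wavefronts_shared and its ideal variant.
print(excessive_count(96, 64))  # 32
print(excess_share(100, 75))    # 0.25
```

Under this assumption, a large excess share at a source line points to an access pattern that could be improved at that location.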

Several of the above metrics on memory operations were renamed in version 2021.2 as follows:

| Old name | New name |
|---|---|
| memory_l2_sectors_global | memory_l2_theoretical_sectors_global |
| memory_l2_sectors_global_ideal | memory_l2_theoretical_sectors_global_ideal |
| memory_l2_sectors_local | memory_l2_theoretical_sectors_local |
| memory_l1_sectors_global | memory_l1_tag_requests_global |
| memory_l1_sectors_shared | memory_l1_wavefronts_shared |
| memory_l1_sectors_shared_ideal | memory_l1_wavefronts_shared_ideal |
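If you maintain scripts that still use the pre-2021.2 names, a small lookup table can translate them. The sketch below only encodes the renames listed above; the dictionary and function names are hypothetical and not part of any NVIDIA tool.

```python
# Renames listed in the table above (old name -> new name).
RENAMED_IN_2021_2 = {
    "memory_l2_sectors_global": "memory_l2_theoretical_sectors_global",
    "memory_l2_sectors_global_ideal": "memory_l2_theoretical_sectors_global_ideal",
    "memory_l2_sectors_local": "memory_l2_theoretical_sectors_local",
    "memory_l1_sectors_global": "memory_l1_tag_requests_global",
    "memory_l1_sectors_shared": "memory_l1_wavefronts_shared",
    "memory_l1_sectors_shared_ideal": "memory_l1_wavefronts_shared_ideal",
}


def modernize_metric_names(names):
    """Replace old metric names with their new names; keep all others unchanged."""
    return [RENAMED_IN_2021_2.get(name, name) for name in names]


print(modernize_metric_names(["memory_l1_sectors_shared", "memory_type"]))
# ['memory_l1_wavefronts_shared', 'memory_type']
```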

The individual sampling metrics each show one part of the information that is combined in the sampling data above. See Statistical Sampler for their descriptions.

Register Dependencies

Dependencies between registers are displayed in the SASS view. When a register is read, all the potential addresses where it could have been written are found. The links between these lines are drawn in the view. All dependencies for registers, predicates, uniform registers and uniform predicates are shown in their respective columns.

Dependencies across source files and functions are not tracked.

The Register Dependencies Tracking feature is enabled by default, but can be disabled completely in

6.2.5. Comments Page

The Comments page aggregates all section comments in a single view and allows the user to edit those comments on any launch instance or section, as well as on the overall report. Comments are persisted with the report. If a section comment is added, the comment icon of the respective section in the Details Page will be highlighted.

6.2.6. Call Stack / NVTX Page

The Call Stack section of this report page shows the CPU call stack for the executing CPU thread at the time the kernel was launched. For this information to show up in the profiler report, the option to collect CPU call stacks must have been enabled in the Connection Dialog or using the corresponding NVIDIA Nsight Compute CLI command line parameter.

The NVTX section of this report page shows the NVTX context when the kernel was launched. All thread-specific information is with respect to the thread of the kernel's launch API call. Note that NVTX information is only collected if the profiler is started with NVTX support enabled, either in the Connection Dialog or using the NVIDIA Nsight Compute CLI command line parameter.

6.2.7. Raw Page

The Raw page shows a list of all collected metrics with their units per profiled kernel launch. It can be exported, for example, to CSV format for further analysis. The page features a filter edit to quickly find specific metrics. You can transpose the table of kernels and metrics by using the transpose button.

6.3. Metrics and Units

Numeric metric values are shown in various places in the report, including the header and tables and charts on most pages. NVIDIA Nsight Compute supports various ways to display those metrics and their values.

By default, units are scaled automatically so that metric values are shown with a reasonable order of magnitude. Units are scaled using their SI-factors, i.e. byte-based units are scaled using a factor of 1000 and the prefixes K, M, G, etc. Time-based units are also scaled using a factor of 1000, with the prefixes n, u and m. This scaling can be disabled in the Profile options.
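The scaling rule can be sketched as follows. This is only an illustration of factor-1000 scaling with the prefixes named above; the function name, the two-decimal formatting and the exact set of prefixes are assumptions, not the tool's actual implementation.

```python
LARGE_PREFIXES = ["", "K", "M", "G", "T"]  # for values of 1000 and above
SMALL_PREFIXES = ["", "m", "u", "n"]       # for values below 1


def scale_si(value, unit):
    """Scale a value by factors of 1000 and attach the matching SI prefix."""
    if value == 0:
        return f"0.00 {unit}"
    magnitude = abs(value)
    index = 0
    if magnitude >= 1:
        while magnitude >= 1000 and index < len(LARGE_PREFIXES) - 1:
            magnitude /= 1000
            index += 1
        prefix = LARGE_PREFIXES[index]
    else:
        while magnitude < 1 and index < len(SMALL_PREFIXES) - 1:
            magnitude *= 1000
            index += 1
        prefix = SMALL_PREFIXES[index]
    sign = "-" if value < 0 else ""
    return f"{sign}{magnitude:.2f} {prefix}{unit}"


print(scale_si(1_500_000, "byte"))  # 1.50 Mbyte
print(scale_si(0.25, "s"))          # 250.00 ms
```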

Metrics which could not be collected are shown as n/a and assigned a warning icon. If a metric's floating point value is out of the regular range (i.e. nan (Not a number) or inf (infinite)), it is also assigned a warning icon. The exceptions are metrics for which these values are expected and which are white-listed internally.
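The warning rule can be expressed as a small predicate. The sketch below is a hypothetical illustration: it represents an uncollected metric as `None` and takes the internal white-list decision as a plain boolean, neither of which reflects how the tool stores this information.

```python
import math


def needs_warning_icon(value, expects_special_values=False):
    """Return True if a metric value would be flagged according to the rule above.

    value is None for a metric that could not be collected (shown as n/a).
    expects_special_values stands in for the internal white-list.
    """
    if value is None:
        return True
    if expects_special_values:
        return False
    return math.isnan(value) or math.isinf(value)


print(needs_warning_icon(None))               # True
print(needs_warning_icon(float("nan")))       # True
print(needs_warning_icon(float("inf"), True)) # False
print(needs_warning_icon(42.0))               # False
```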

7. Baselines

NVIDIA Nsight Compute supports diffing collected results across one or multiple reports using Baselines. Each result in any report can be promoted to a baseline. This causes metric values from all results in all reports to show the difference to the baseline. If multiple baselines are selected simultaneously, metric values are compared to the average across all current baselines. Note that currently, baselines are not stored with a report and are only available as long as the same NVIDIA Nsight Compute instance is open.

Promote the current result in focus to make it a baseline. If a baseline is set, most metrics on the Details Page, Raw Page and Summary Page show two values: the current value of the result in focus, and either the corresponding value of the baseline or the percentage of change from the corresponding baseline value. (Note that an infinite percentage gain, inf%, may be displayed when the baseline value for the metric is zero, while the focus value is not.)
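The comparison against one or several baselines can be sketched as below. The averaging over multiple baselines and the infinite result for a zero baseline follow the description above; the function names and the exact percentage formula are assumptions made for illustration.

```python
import math


def baseline_reference(baseline_values):
    """Average of the metric across all currently selected baselines."""
    return sum(baseline_values) / len(baseline_values)


def percent_change(current, baseline_values):
    """Percentage change of the focused result relative to the baseline reference."""
    reference = baseline_reference(baseline_values)
    if reference == 0:
        if current == 0:
            return 0.0
        # A zero baseline with a non-zero focus value is reported as infinite.
        return math.copysign(math.inf, current)
    return (current - reference) * 100.0 / abs(reference)


print(percent_change(120, [100]))      # 20.0
print(percent_change(90, [100, 80]))   # 0.0
print(percent_change(5, [0]))          # inf
```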

Hovering the mouse over a baseline name allows the user to edit the displayed name. Hovering over the baseline color icon allows the user to remove this specific baseline from the list.

Use the corresponding entry from the dropdown button, the Profile menu, or the corresponding toolbar button to remove all baselines.

8. Standalone Source Viewer

NVIDIA Nsight Compute includes a standalone source viewer for cubin files. This view is identical to the Source Page, except that it won't include any performance metrics.

Cubin files can be opened from the main menu. The SM Selection dialog will be shown before opening the standalone source view. If available, the SM version present in the file name is pre-selected. For example, if your file name is mergeSort.sm_80.cubin, then SM 8.0 will be pre-selected in the dialog. Choose the appropriate SM version from the dropdown menu if it's not included in the file name.
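The pre-selection from the file name can be pictured with a short sketch. The pattern below is an assumption derived only from the mergeSort.sm_80.cubin example; the rules the tool actually applies are not documented here, and the function name is hypothetical.

```python
import re


def preselected_sm(file_name):
    """Return the SM version embedded in a cubin file name, or None if absent.

    Assumes the version appears as '.sm_<digits>.' with at least two digits,
    where the last digit is the minor version.
    """
    match = re.search(r"\.sm_(\d{2,})\.", file_name)
    if match is None:
        return None
    digits = match.group(1)
    return f"{digits[:-1]}.{digits[-1]}"


print(preselected_sm("mergeSort.sm_80.cubin"))  # 8.0
print(preselected_sm("mergeSort.cubin"))        # None
```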

9. Occupancy Calculator

NVIDIA Nsight Compute provides an Occupancy Calculator that allows you to compute the multiprocessor occupancy of a GPU for a given CUDA kernel. It offers feature parity with the CUDA Occupancy Calculator spreadsheet.

Select the Occupancy Calculator activity from the connection dialog. You can optionally specify an occupancy calculator data file, which is used to initialize the calculator with the data from the saved file. Click the corresponding button to open the Occupancy Calculator.

The user interface consists of an input section as well as tables and graphs that display information about GPU occupancy. To use the calculator, change the input values in the input section, apply them, and examine the tables and graphs.

9.1. Tables

The tables show the occupancy, as well as the number of active threads, warps, and thread blocks per multiprocessor, and the maximum number of active blocks on the GPU.
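To give a feel for how these table values relate, the following simplified sketch estimates active blocks, active warps and occupancy per multiprocessor. It is not the calculator's algorithm: it ignores allocation granularity, and all hardware limits are hypothetical default parameters rather than properties of a specific GPU.

```python
import math


def estimate_occupancy(threads_per_block, registers_per_thread, shared_memory_per_block,
                       max_warps_per_sm=64, max_blocks_per_sm=32,
                       registers_per_sm=65536, shared_memory_per_sm=65536,
                       warp_size=32):
    """Estimate per-multiprocessor occupancy from simple resource limits."""
    warps_per_block = math.ceil(threads_per_block / warp_size)
    # Each resource limits how many blocks can be resident at the same time.
    limits = [max_blocks_per_sm, max_warps_per_sm // warps_per_block]
    if registers_per_thread > 0:
        registers_per_block = registers_per_thread * warp_size * warps_per_block
        limits.append(registers_per_sm // registers_per_block)
    if shared_memory_per_block > 0:
        limits.append(shared_memory_per_sm // shared_memory_per_block)
    active_blocks = min(limits)
    active_warps = active_blocks * warps_per_block
    return {
        "active_blocks": active_blocks,
        "active_warps": active_warps,
        "active_threads": active_warps * warp_size,
        "occupancy": active_warps / max_warps_per_sm,
    }


# Hypothetical kernel: 256 threads per block, 64 registers per thread.
print(estimate_occupancy(256, 64, 0)["occupancy"])  # 0.5
```

In this example the register limit allows only four resident blocks, so half of the assumed 64 warp slots stay empty.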

9.2. Graphs

The graphs show the occupancy for your chosen block size as a blue circle, and for all other possible block sizes as a line graph.

9.3. GPU Data

The GPU Data section shows the properties of all supported devices.

10. Options

10.1. Profile

10.2. Environment

10.3. Connection

Target Connection Properties

| Name | Description | Values |
|---|---|---|
| Base Port | Base port used to establish a connection from the host to the target application during an activity (both local and remote). | 1-65535 (Default: 49152) |
| Maximum Ports | Maximum number of ports to try (starting from Base Port) when attempting to connect to the target application. | 2-65534 (Default: 64) |

Host Connection Properties

| Name | Description | Values |
|---|---|---|
| Base Port | Base port used to establish a connection from the command line profiler to the host application during an activity (both local and remote). | 1-65535 (Default: 50152) |
| Maximum Ports | Maximum number of ports to try (starting from Base Port) when attempting to connect to the host application. | 1-100 (Default: 10) |
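The interplay of Base Port and Maximum Ports can be sketched as a list of candidate ports. The sketch assumes that ports are tried consecutively upward from the base port, which the tables above do not state explicitly; the function name is hypothetical.

```python
def candidate_ports(base_port, maximum_ports):
    """Ports that would be tried, assuming consecutive ports starting at base_port."""
    last_port = min(base_port + maximum_ports - 1, 65535)
    return list(range(base_port, last_port + 1))


# Target connection defaults from the table above.
target_ports = candidate_ports(49152, 64)
print(target_ports[0], target_ports[-1])  # 49152 49215

# Host connection defaults from the table above.
host_ports = candidate_ports(50152, 10)
print(host_ports[0], host_ports[-1])      # 50152 50161
```

Under this assumption, the listed ranges are the ones to keep open in a firewall between host and target.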

10.4. Source Lookup

| Name | Description | Values |
|---|---|---|
| Program Source Locations | Set program source search paths. These paths are used to resolve CUDA-C source files on the Source page if the respective file cannot be found in its original location. Files which cannot be found are marked with a file-not-found error. See the Ignore File Properties option for files that are found but don't match. | |
| Ignore File Properties | Ignore file properties (e.g. timestamp, size) for source resolution. If this is disabled, all file properties like modification timestamp and file size are checked against the information stored by the compiler in the application during compilation. If a file with the same name exists on a source lookup path, but not all properties match, it won't be used for resolution (and a file-mismatch error will be shown). | Yes/No (Default) |
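The effect of the Ignore File Properties option can be summarized in a small decision function. This is a hypothetical sketch: the `FileProperties` type, the status strings and the choice of size and modification time as the compared properties are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FileProperties:
    size: int    # file size in bytes
    mtime: int   # modification timestamp


def resolution_status(found: Optional[FileProperties],
                      recorded: FileProperties,
                      ignore_file_properties: bool = False) -> str:
    """Classify a source lookup as 'resolved', 'mismatch' or 'not found'.

    found is None when no file with the expected name exists on any lookup path.
    recorded holds the properties stored in the application at compile time.
    """
    if found is None:
        return "not found"
    if ignore_file_properties or found == recorded:
        return "resolved"
    return "mismatch"


recorded = FileProperties(size=2048, mtime=1_600_000_000)
edited = FileProperties(size=2100, mtime=1_600_000_500)
print(resolution_status(edited, recorded))        # mismatch
print(resolution_status(edited, recorded, True))  # resolved
```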

10.5. Send Feedback

11. Projects

Note that only references to reports or other files are saved in the project file. Those references can become invalid, for example when the associated files are deleted or moved, or when they are not available on the current system because the project file itself was moved.

NVIDIA Nsight Compute uses the ncu-proj file extension for project files.

11.1. Project Dialogs

Creates a new project. The project must be given a name, which will also be used for the project file. You can select the location where the project file should be saved on disk. Select whether a new directory with the project name should be created in that location.

11.2. Project Explorer

The Project Explorer window allows you to inspect and manage the current project. It shows the project name as well as all items (profile reports and other files) associated with it. Right-click on any entry to see further actions, such as adding, removing or grouping items. Type in the toolbar at the top to filter the currently shown entries.

12. Visual Profiler Transition Guide

12.1. Trace

NVIDIA Nsight Compute does not support tracing GPU or API activities on an accurate timeline. This functionality is covered by NVIDIA Nsight Systems. In the Interactive Profile Activity, the API Stream tool window provides a stream of recent API calls on each thread. However, since all tracked API calls are serialized by default, it does not collect accurate timestamps.

12.2. Sessions

Instead of sessions, NVIDIA Nsight Compute uses Projects to launch and gather connection details and collected reports.

12.3. Timeline

Since trace analysis is now covered by Nsight Systems, NVIDIA Nsight Compute does not provide views of the application timeline. The API Stream tool window does show a per-thread stream of the last captured CUDA API calls. However, those are serialized and do not maintain runtime concurrency or provide accurate timing information.

12.4. Analysis

All trace-based analysis is now covered by NVIDIA Nsight Systems. This means that NVIDIA Nsight Compute does not include analysis regarding concurrent CUDA streams or (for example) UVM events. For per-kernel analysis, NVIDIA Nsight Compute provides recommendations based on collected performance data on the Details Page. These rules currently require you to collect the required metrics via their sections up front, and do not support partial on-demand profiling.

To use the rule-based recommendations, enable the respective rules in the Sections/Rules Info. Before profiling, enable rule application in the Profile Options, or apply the rules from the report afterward.

All trace-based analysis is now covered by Nsight Systems. For per-kernel analysis, Python-based rules provide analysis and recommendations. See above for more details.

Source-correlated PC sampling information can now be viewed in the Source Page. Aggregated warp states are shown on the Details Page in the corresponding section.

Memory Statistics are located on the Details Page. Enable the respective sections to collect this information.

The NVLink topology diagram and the NVLink property table are located on the Details Page. Enable the respective NVLink sections to collect this information.

Refer to the Known Issues section for the limitations related to NVLink.

Source correlated with PTX and SASS disassembly is shown on the Source Page. Which information is available depends on your application’s compilation/JIT flags.

NVIDIA Nsight Compute does not automatically collect data for each executed kernel, and it does not collect any data for device-side memory copies. Summary information for all profiled kernel launches is shown on the Summary Page. Comprehensive information on all collected metrics for all profiled kernel launches is shown on the Raw Page.

CPU callstack sampling is now covered by NVIDIA Nsight Systems.

OpenACC performance analysis with NVIDIA Nsight Compute is available to a limited extent. OpenACC parallel regions are not explicitly recognized, but CUDA kernels generated by the OpenACC compiler can be profiled as regular CUDA kernels. See the NVIDIA Nsight Systems release notes to check its latest support status.

NVIDIA Nsight Compute does not collect CUDA API and GPU activities and their properties. Performance data for profiled kernel launches is reported (for example) on the Details Page.

NVIDIA Nsight Compute does not currently collect stdout/stderr application output.

Application launch settings are specified in the Connection Dialog. For reports collected from the UI, launch settings can be inspected on the Session Page after profiling.

Source for CPU-only APIs is not available. Source for profiled GPU kernel launches is shown on the Source Page.

12.5. Command Line Arguments

13. Visual Studio Integration Guide

13.1. Visual Studio Integration Overview

14. Library Support

NVIDIA Nsight Compute can be used to profile CUDA applications, as well as applications that use CUDA via NVIDIA or third-party libraries. For most such libraries, the behavior is expected to be identical to applications using CUDA directly. However, for certain libraries, NVIDIA Nsight Compute has certain restrictions, alternate behavior, or requires non-default setup steps prior to profiling.

14.1. OptiX

NVIDIA Nsight Compute supports profiling of OptiX applications, but with certain restrictions.

The following feature set is supported per OptiX API version:

| OptiX API Version | Kernel Profiling | API Interception | Resource Tracking |
|---|---|---|---|
| 6.x | Yes | No | No |
| 7.0 | Yes | Yes | Yes |
| 7.1 | Yes | Yes | Yes |
| 7.2 | Yes | Yes | Yes |
| 7.3 | Yes | Yes | Yes |

Notices

Notice

ALL NVIDIA DESIGN SPECIFICATIONS, REFERENCE BOARDS, FILES, DRAWINGS, DIAGNOSTICS, LISTS, AND OTHER DOCUMENTS (TOGETHER AND SEPARATELY, "MATERIALS") ARE BEING PROVIDED "AS IS." NVIDIA MAKES NO WARRANTIES, EXPRESSED, IMPLIED, STATUTORY, OR OTHERWISE WITH RESPECT TO THE MATERIALS, AND EXPRESSLY DISCLAIMS ALL IMPLIED WARRANTIES OF NONINFRINGEMENT, MERCHANTABILITY, AND FITNESS FOR A PARTICULAR PURPOSE.

Information furnished is believed to be accurate and reliable. However, NVIDIA Corporation assumes no responsibility for the consequences of use of such information or for any infringement of patents or other rights of third parties that may result from its use. No license is granted by implication or otherwise under any patent rights of NVIDIA Corporation. Specifications mentioned in this publication are subject to change without notice. This publication supersedes and replaces all other information previously supplied. NVIDIA Corporation products are not authorized as critical components in life support devices or systems without express written approval of NVIDIA Corporation.

Trademarks

NVIDIA and the NVIDIA logo are trademarks or registered trademarks of NVIDIA Corporation in the U.S. and other countries. Other company and product names may be trademarks of the respective companies with which they are associated.