What is "no healthy upstream"?

No Healthy Upstream error when health_check property omitted #1932


tlhunter commented Oct 24, 2017

This is a bit of a follow-up to #1912. I’m currently using only CDS. My CDS endpoint returns information about hundreds of services. Here’s a truncated result:

Note that there is no health_check property defined (on any entry) which should mean that health checks are disabled.

However, when I run the application, I notice two things. The first thing is that Envoy takes about 4 minutes before it starts allowing ingress HTTP calls to occur. The output logs which confirm this look like so:

Afterwards, if I make an egress request to the autocomplete service via Envoy, the request will fail. Manually making a cURL request to that same URL will succeed (assuming I replace tcp:// with http:// ). When it fails, Envoy displays the following message:

I am making use of a special Envoy-Discovery-Target header to notify Envoy which discovery service I’m attempting to communicate with. This configuration appears to partly work because when I grep the /stats admin endpoint after three failed egress requests I see the following (all lines with 0 values removed for brevity):

The several-minute pause, the upstream_cx_none_healthy: 3 stat, and the envoy process idling at 10% CPU make me think that health checking is somehow enabled. Does anyone know why these services are listed as unhealthy despite there being no health checks?
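The filtering of the /stats output described above (keeping only non-zero values for one cluster) can be sketched in Python. This is a minimal sketch, assuming the plain-text `name: value` layout of the admin endpoint; the function name and the sample lines are hypothetical, and only the `upstream_cx_none_healthy: 3` value comes from the report.

```python
def nonzero_cluster_stats(stats_text, cluster):
    """Return {stat_name: value} for the non-zero integer stats of one cluster.

    Assumes lines shaped like "cluster.<name>.<stat>: <integer>".
    """
    prefix = f"cluster.{cluster}."
    result = {}
    for line in stats_text.splitlines():
        name, sep, value = line.partition(": ")
        if not sep or not name.startswith(prefix):
            continue
        try:
            number = int(value.strip())
        except ValueError:
            continue  # skip histograms and other non-integer values
        if number != 0:
            result[name[len(prefix):]] = number
    return result


# Made-up sample for illustration; not the reporter's actual output.
SAMPLE = """\
cluster.autocomplete.membership_total: 1
cluster.autocomplete.membership_healthy: 0
cluster.autocomplete.upstream_cx_none_healthy: 3
cluster.autocomplete.upstream_rq_total: 0
cluster.other.upstream_cx_none_healthy: 7
"""

if __name__ == "__main__":
    for stat, value in nonzero_cluster_stats(SAMPLE, "autocomplete").items():
        print(f"{stat}: {value}")
```

A non-zero `upstream_cx_none_healthy` next to a `membership_healthy` of 0 is the combination worth looking for when a cluster is unexpectedly treated as unhealthy.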

The following is my current configuration:


Istio Ingress resulting in «no healthy upstream»

I am deploying an outward-facing service that is exposed behind a NodePort and then an Istio ingress. The deployment uses manual sidecar injection. Once the deployment, NodePort and ingress are running, I can make a request to the Istio ingress.

For some unknown reason, the request does not route through to my deployment and instead displays the text «no healthy upstream». Why is this, and what is causing it?

I can see in the http response that the status code is 503 (Service Unavailable) and the server is «envoy». The deployment is functioning as I can map a port forward to it and everything works as expected.
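The three symptoms described here (status 503, a server header naming envoy, and the «no healthy upstream» body) can be checked together. The helper below is a hypothetical sketch, not part of Istio or Envoy; it only classifies a response you have already fetched by some other means.

```python
def is_envoy_no_healthy_upstream(status, headers, body):
    """Return True when a response matches the symptoms in the question.

    status:  integer HTTP status code
    headers: mapping of header names to values (case-insensitive lookup)
    body:    response body as text
    """
    server = ""
    for key, value in headers.items():
        if key.lower() == "server":
            server = value.lower()
    return (
        status == 503
        and "envoy" in server
        and "no healthy upstream" in body.lower()
    )


if __name__ == "__main__":
    print(is_envoy_no_healthy_upstream(503, {"server": "envoy"}, "no healthy upstream"))
```

If this matches while a port-forward straight to the pod works, the proxy itself answered the request, which points at routing or endpoint configuration rather than at the application.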

4 Answers

Although this is a somewhat general error resulting from a routing issue within an improper Istio setup, I will provide a general solution/piece of advice to anyone coming across the same issue.

In my case the issue was due to an incorrect route rule configuration: the Kubernetes native services were functioning, but the Istio routing rules were misconfigured, so Istio could not route from the ingress into the service.

Just in case you get curious, like I did, even though in my scenario the cause of the error was clear.

Even though I knew the problem and how to fix it (just deploy v1), I still wondered: how can I get more information about this error? How could I analyze it more deeply to find out what was happening?

This is one way to investigate, using Istio's configuration command-line utility, istioctl:

Transient 503 UH «no healthy upstream» errors during CDS updates #13070


kryzthov-stripe commented Sep 11, 2020

Title: Envoy briefly fails to route requests during CDS updates

Description:

When a cluster is updated via CDS, we sometimes observe request failures in the form of HTTP 503 «no healthy upstream» with the UH response flag.

The membership total for the cluster remains constant throughout the update.

The membership healthy falls to 0 shortly after the CDS update begins, then returns to normal shortly after the CDS update completes.

Request failures do occur regardless of the panic routing thresholds.

The docs around cluster warming (in https://www.envoyproxy.io/docs/envoy/latest/intro/arch_overview/upstream/cluster_manager#cluster-warming) suggest there should be no traffic disruption during a CDS update.

Repro steps:

We observed and reproduced this behavior on static or EDS clusters by sending a CDS update to change the health-checking timeout (e.g. from 5s to 10s and back).

For example, we used an EDS cluster with a 1% panic routing threshold, configured along the lines of:

The cluster load assignment included a stable pool of 10 healthy backends.

Envoy does not appear to make any health-check request during the CDS update.

The backends are hard-coded to report healthy, and run locally on the same host to minimize possible network interferences.
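To make the expectation behind this report concrete, here is a rough sketch of the panic-threshold arithmetic, assuming the documented rule that panic routing applies when the percentage of healthy hosts drops below the configured threshold. The function names and the sample series are hypothetical; this is not Envoy's implementation.

```python
def panic_mode_expected(membership_total, membership_healthy, panic_threshold_percent):
    """True when the healthy percentage is below the panic threshold."""
    if membership_total == 0:
        return False  # nothing to route to, panic routing cannot help
    healthy_percent = 100.0 * membership_healthy / membership_total
    return healthy_percent < panic_threshold_percent


def surprising_samples(samples, panic_threshold_percent):
    """Return timestamps where healthy is 0, total is not, and panic routing
    would be expected to keep traffic flowing.

    samples: iterable of (timestamp, membership_total, membership_healthy)
    """
    return [
        ts
        for ts, total, healthy in samples
        if total > 0
        and healthy == 0
        and panic_mode_expected(total, healthy, panic_threshold_percent)
    ]


if __name__ == "__main__":
    # Hypothetical series: 10 backends, healthy count dips to 0 during an update.
    series = [(0, 10, 10), (1, 10, 0), (2, 10, 10)]
    print(surprising_samples(series, panic_threshold_percent=1.0))
```

With 10 members, 0 healthy and a 1% threshold, the check returns True, which is why a 503 UH at that moment is unexpected under this reading of the threshold.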

Logs:

We’ve reproduced this using a version of Envoy 1.14.5-dev with some extra log statements:

Based on the trace above, it looks like a load-balancer of the updated cluster is used to route a request before its underlying host set is initialized.

I’m not familiar enough with Envoy internals and could use some help to understand if I’m somehow misconfiguring Envoy, or if something else is happening.


Virtual Geek

Tales from real IT system administrators world and non-production environment

VMware vCenter server Error no healthy upstream

After completing the VMware vCenter Server Appliance (VCSA) installation and configuration, I tested the vCenter UI URL https://vcenter:443/ui and received the error below. I restarted the vCenter server (VCSA) multiple times and checked that DNS entries (A host record and PTR pointer record) exist on the DNS server for the vCenter server, but the error didn’t go away.

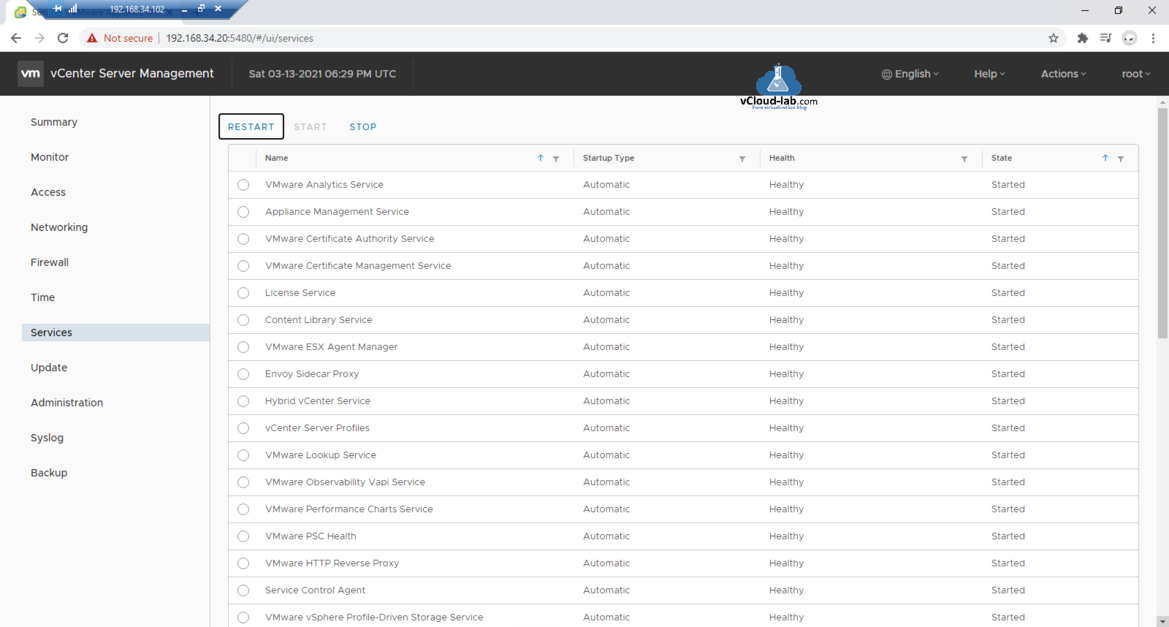

To troubleshoot further, I checked the status of all services in the vCenter Server Management (VAMI) portal on port 5480, restarted a couple of services, and checked server health under Monitor. All was good, but still no luck.

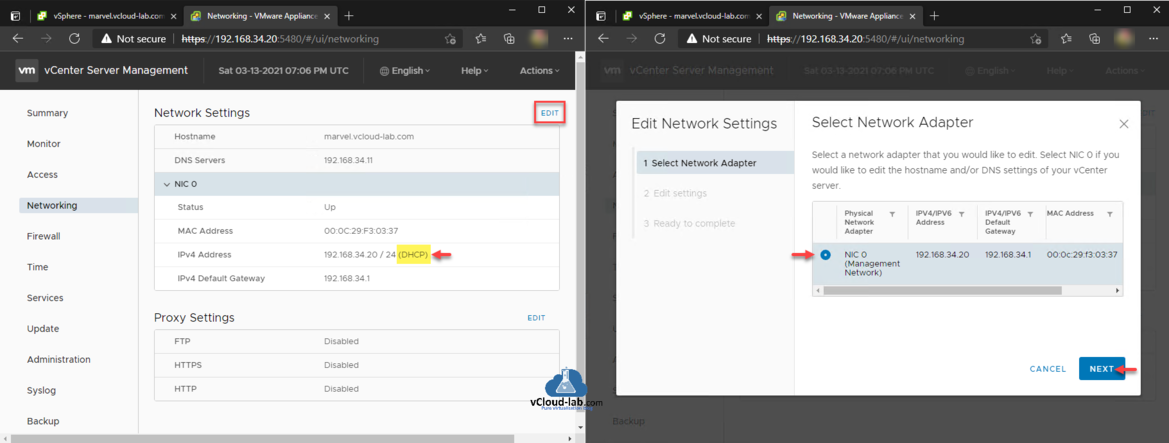

Next I recalled one of the settings I had configured and documented in Unable to save IP settings Install Stage 2 Set up vCenter Server configuration, where I had configured the vCenter server to obtain its IP address from DHCP. I reverted the IP address setting from DHCP to static with the steps below. Before making any changes, make sure you back up the vCenter server or take a snapshot.

Log in to the vCenter Server Management (VAMI) portal on port 5480, go to the Networking section and click EDIT. I have only one network adapter and it is already selected, so click Next.

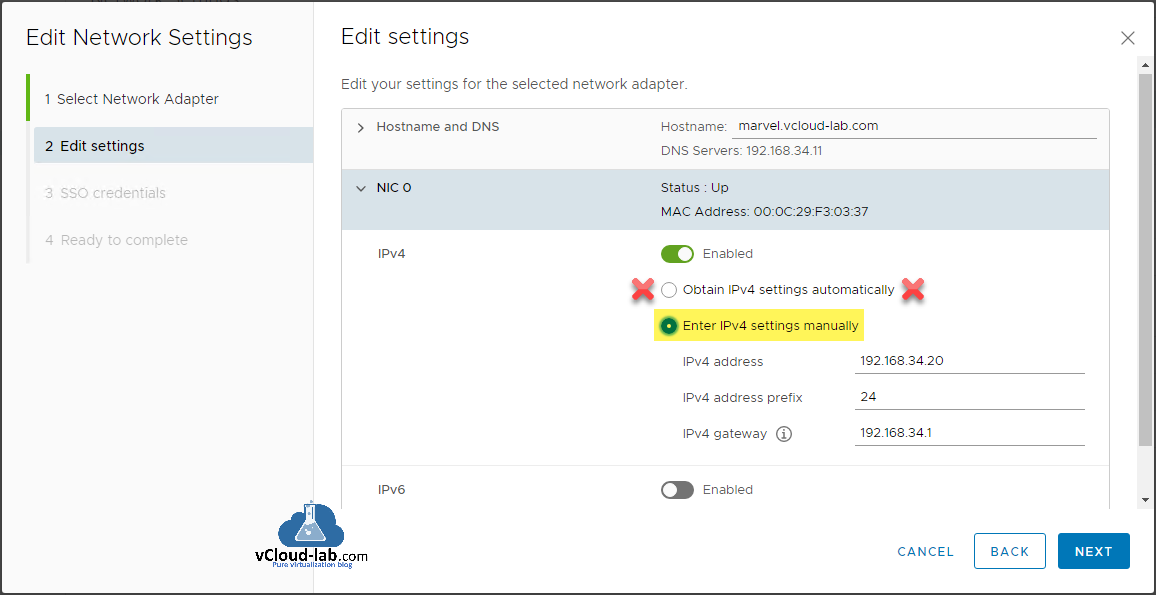

In Edit Settings, change the setting from Obtain IPv4 settings automatically to Enter IPv4 settings manually and click the Next button.

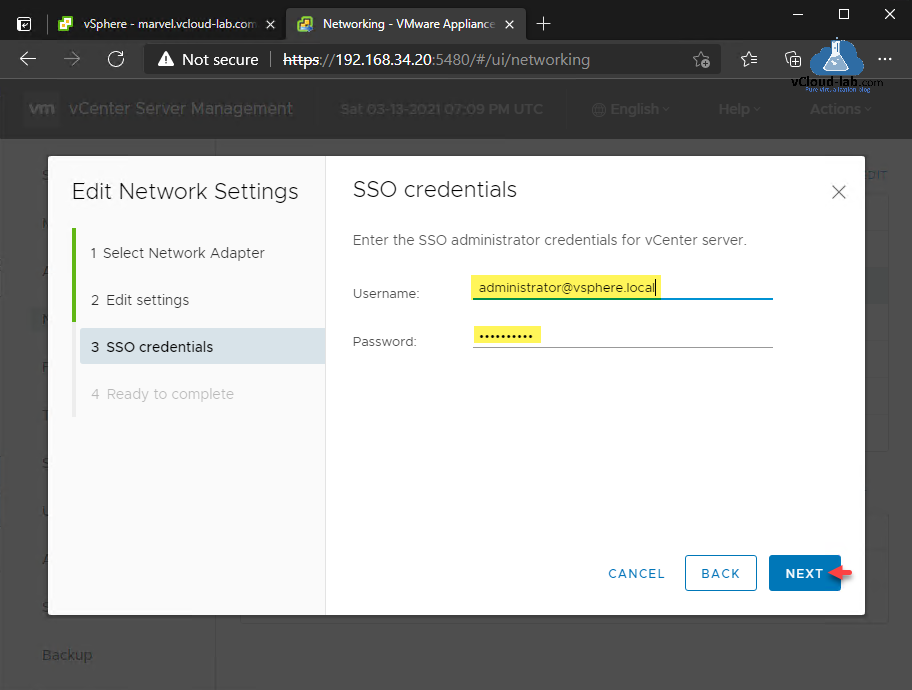

Provide SSO administrator credentials for the vCenter server and click the Next button (Username: administrator@vsphere.local).

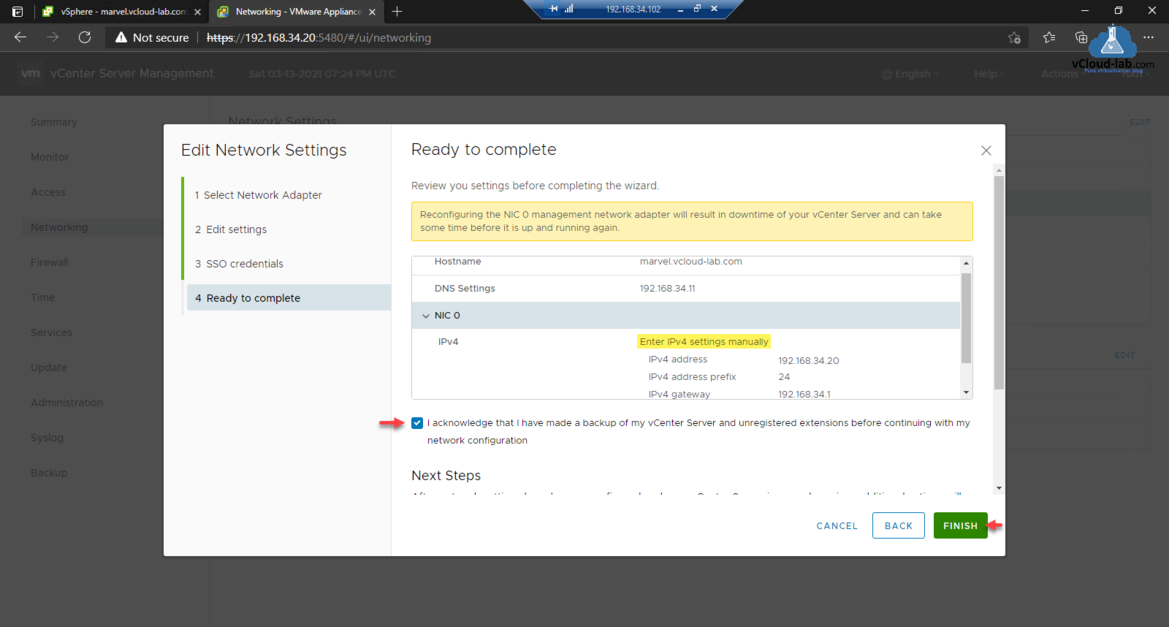

Last, on the Ready to complete page, verify the settings to be applied. Click the checkbox to acknowledge that you have made a backup of your vCenter server and unregistered extensions before continuing with your network configuration.

After network settings have been reconfigured and your vCenter server is up and running, additional actions will be required.

1. All deployed plug-ins will need to be reregistered.

2. All custom certificates will need to be regenerated.

3. vCenter HA will need to be reconfigured.

4. Hybrid Link with Cloud vCenter server will need to be recreated.

5. Active Directory will need to be rejoined.

Click the Finish button.

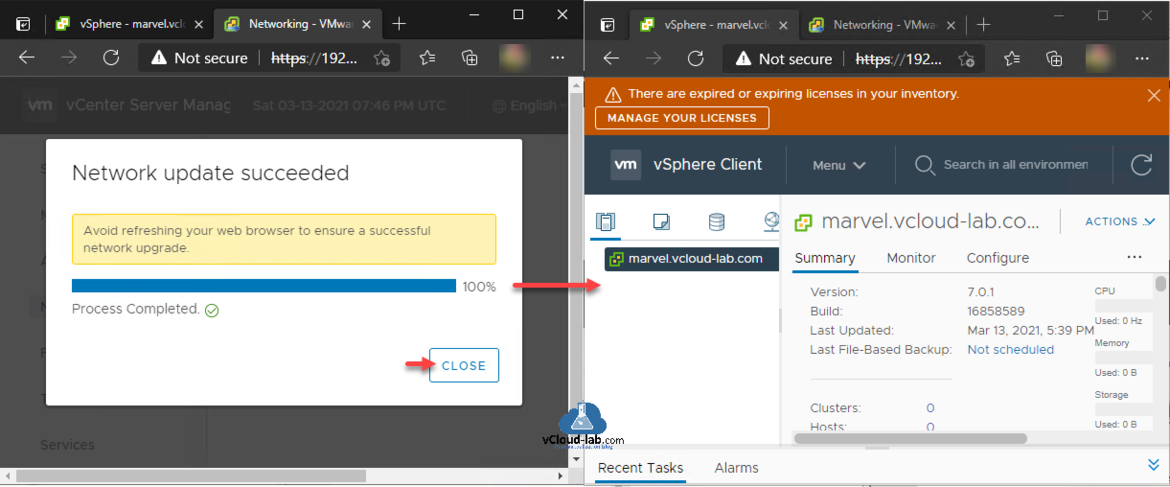

Avoid refreshing your web browser to ensure a successful network update. Once the update is 100% complete, close the window and test the vCenter server URL https://vcenter/ui. All looks good.

If you want to change the same GUI setting through vCenter Server SSH, open a shell and set DHCP=no in the file /etc/systemd/network/10-eth0.network, as shown in Unable to save IP settings Install Stage 2 Set up vCenter Server configuration.
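The DHCP=no change can be sketched as a small text transformation. This is only an illustration: the helper name and the sample file contents are assumptions, it touches nothing but the DHCP line, and the static address entries the file also needs are outside its scope. Take a backup or snapshot before writing anything back to the appliance.

```python
def set_dhcp_no(network_file_text):
    """Return the file text with any "DHCP=..." line replaced by "DHCP=no".

    Raises ValueError when no DHCP line exists, so a surprising file layout
    is noticed instead of being silently rewritten.
    """
    out = []
    replaced = False
    for line in network_file_text.splitlines():
        if line.strip().lower().startswith("dhcp="):
            out.append("DHCP=no")
            replaced = True
        else:
            out.append(line)
    if not replaced:
        raise ValueError("no DHCP= line found")
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    # Hypothetical contents; the real 10-eth0.network file may differ.
    sample = "[Match]\nName=eth0\n\n[Network]\nDHCP=yes\n"
    print(set_dhcp_no(sample), end="")
```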

All sidecars reported 503 “no healthy upstream” for egress cluster #10515


wansuiye commented Dec 17, 2018 •

The Istio cluster ran well for several days; however, yesterday all sidecars suddenly reported 503 «no healthy upstream» errors for egress clusters.

The sidecars could receive the xDS config, and the config downloaded with «istioctl pc xxx» is also synced.

I ran the command «istioctl pc endpoint podxxx»: the hosts are all registry hosts, and no egress hosts were found.

Meanwhile, new pods have all the hosts and work well.

I checked the Pilot logs and found three types of warning logs:

1) These logs occurred randomly before the first 503 error and have not appeared since.

2) These logs occurred randomly.

3) This log occurred randomly.

Today the new pods work well; however, the old pods still report 503.

Expected behavior

<< What did you expect to happen? >>

Pods can reach the egress cluster successfully.

Environment

<< Which environment, cloud vendor, OS, etc are you using? >>

kubernetes

Cluster state

<< If you're running on Kubernetes, consider following the instructions to generate «istio-dump.tar.gz», then attach it here by dragging and dropping the file onto this issue. >>


linsun commented Dec 17, 2018

What is the pod/deployment status for your egress? Is it possible it has crashed? Do you have HA configured for your egress deployment?

linsun commented Dec 17, 2018

@vadimeisenbergibm can you help triage this?

vadimeisenbergibm commented Dec 17, 2018

wansuiye commented Dec 18, 2018 •

What is the pod/deployment status for your egress? Is it possible it has crashed? Do you have HA configured for your egress deployment?

@linsun Thank you for your reply. The egress instances are all healthy and the new pods can receive them. There is no HA configured for the egress instances. We have many egress configs and they all report «no healthy upstream».

vadimeisenbergibm commented Dec 18, 2018

@wansuiye You are accessing an external service by HTTP, through an egress gateway, correct?

Could you please configure egress gateway according to https://istio.io/docs/examples/advanced-egress/egress-gateway/#define-an-egress-gateway-and-direct-http-traffic-through-it and see if it works? Alternatively, can you publish your existing egress configuration?

Additional diagnosing steps, if you can publish the output here:

wansuiye commented Dec 18, 2018 •

@vadimeisenbergibm Hi, we are accessing services over HTTP/TCP directly, not using an egress gateway.

Today I found something strange:

The old pods that report errors cannot connect to Pilot after I restart Pilot, so the command «istioctl proxy-status pod» shows only the new pods. They could connect to Pilot yesterday.

And the new pods cannot connect to the new egress (they get 404) that I created today as a test, although they can sync the config from Pilot and the new egress config can be found in the output of “istioctl proxy-config clusters/route/endpoint newpod”.

Because we have a lot of egress configurations in a production environment, I am pasting only part of the configuration, with minor changes.

the egress configuration:

egress.txt

duderino commented Dec 18, 2018

@vadimeisenbergibm please assign a priority to this


vadimeisenbergibm commented Dec 19, 2018

@wansuiye So it seems you have two issues:

Problem 2 is the simpler one, so let’s debug it first. I propose that you define egress to some public URL, e.g. httpbin.org like in https://preliminary.istio.io/docs/tasks/traffic-management/egress/#configuring-istio-external-services, and see if it works. If it does not work, please publish your configuration, the log/listeners/clusters of the sidecar proxy of the pod and the command you use to call the external service.

wansuiye commented Dec 19, 2018 •

Hi, I tested problem 2; it reports 404:

The config is set as in the wiki:

The clusters of the proxy:

The endpoints of the proxy:

The route for httpbin is:

vadimeisenbergibm commented Dec 19, 2018 •

@wansuiye The listener looks OK, according to the log of the proxy the request was successfully forwarded to 34.238.3.58 which is an IP of httpbin.org. Could it be that you have some firewall that blocks traffic to httpbin.org? Can you run the same curl command from the sidecar proxy of the same pod? You are using Istio 1.0.3, right?

wansuiye commented Dec 20, 2018 •

@vadimeisenbergibm From the proxy, the command returns 200. Other new egresses also cannot be accessed by the pod, so it’s not a problem with the environment.

Yeah, we are using Istio 1.0.3.

vadimeisenbergibm commented Dec 20, 2018

So the problem is that the routing was somehow not performed: the listener definition you pasted did not take effect.

There is something in listeners’ definition that confuses Envoy and it does not perform the routing, maybe some conflict on port 80.

Do you see maybe some conflicting listeners on port 80?
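One way to look for listeners sharing port 80 is to group a listener dump by port. The sketch below assumes the dump has already been parsed into a list of dicts following Envoy's JSON rendering of a listener (`address.socketAddress.address` and `address.socketAddress.portValue`); the helper names and the sample listeners are hypothetical.

```python
from collections import defaultdict


def listeners_by_port(listeners):
    """Group (name, bind address) pairs by listening port."""
    grouped = defaultdict(list)
    for listener in listeners:
        sock = listener.get("address", {}).get("socketAddress", {})
        port = sock.get("portValue")
        if port is not None:
            grouped[port].append((listener.get("name", ""), sock.get("address", "")))
    return dict(grouped)


def listeners_sharing_port(listeners, port):
    """Return the listeners on `port` when more than one exists, else []."""
    entries = listeners_by_port(listeners).get(port, [])
    return entries if len(entries) > 1 else []


if __name__ == "__main__":
    # Hypothetical listener dump for illustration only.
    dump = [
        {"name": "0.0.0.0_80", "address": {"socketAddress": {"address": "0.0.0.0", "portValue": 80}}},
        {"name": "10.0.0.5_80", "address": {"socketAddress": {"address": "10.0.0.5", "portValue": 80}}},
        {"name": "0.0.0.0_443", "address": {"socketAddress": {"address": "0.0.0.0", "portValue": 443}}},
    ]
    print(listeners_sharing_port(dump, 80))
```

Several listeners on one port are not necessarily a conflict; the output is only a starting point for comparing their bind addresses and routes by hand.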